Brief Introduction

“Artificial Intelligence” is a term that, since the rise of deep neural networks, has shaken off the stuffy cobwebs of good old-fashioned AI (GOFAI), which has become just that: old-fashioned. Today AI is the focus of research and development in companies and universities alike. With good reason, I might add (I won’t bore you with accolades or headlines from pop-science articles).

Mixed in with those cobwebs of GOFAI are cognitive architectures. Born out of the expert systems in the GOFAI archives, cognitive architectures were (and still are) developed as a means of unifying research in cognitive science. The (rough) idea was to model human cognition in a common framework (an architecture) so that theories from across Psychology, Neuroscience, and Linguistics could be unified within a single ontology. That hasn’t quite happened. Yet.

So why do AI (machine learning) and cognitive architectures belong in the same blog post? There are probably a bunch of reasons, but I’m not going to write them all down. The one I do want to talk about is this:

At the end of the day, the AI consumer is us: the humans, the cognizers. If we are going to work with (or for) AI in the (probably) not-too-distant future, then we want a way to interact with these systems, to understand them, and to communicate with them. That, in brief, is the motivation behind Explainable Artificial Intelligence (XAI): we need to understand why AI systems do what they do and why they make the decisions they make. Conversely, if we can build AIs that understand humans, they would be more useful. In my research I build models of human cognition in applied contexts, and applying them to AI has been a lot of fun.

One Problem, Two Mental Models (Common Ground Modeling)

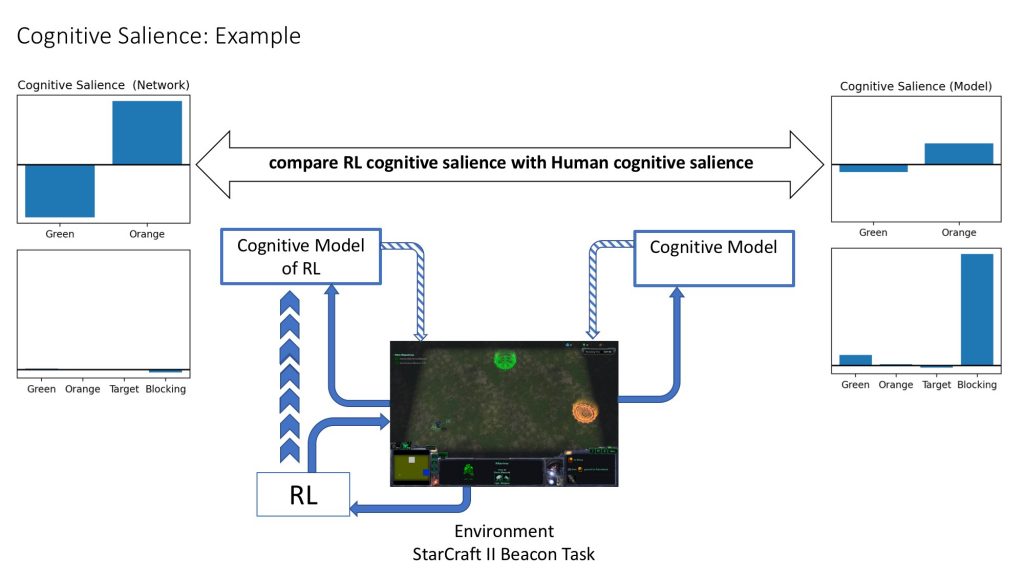

While many solutions for XAI are about introspecting upon the network (see this pdf), generating information about the internal states of the network addresses only half of the communication problem. Common Ground Modeling is about understanding both the mental model of the AI and the mental model of the human (us!). In common ground modeling we model both the AI and the human in a common framework and introspect upon both. An example of the output of the common ground salience technique is illustrated in Figure 1.

Why a Cognitive Model?

Let’s use the 2-beacon task in StarCraft II as an example. This mini-game is a two-choice task: the player heads either for the orange beacon (high reward, stochastic between 1 and 5) or for the green beacon (low reward, stochastic between 1 and 3). The game also requires a control element: moving the character to the chosen beacon by clicking the mouse at the desired location on the screen. Clicking on the screen causes the character to move from its present location to the click location in a straight line. As soon as the character enters the zone of one of the targets, the round ends, the reward is given, and a new round is generated. Depending on how the beacons are configured, reaching one beacon may take multiple clicks to steer the character around the other.
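To make the reward structure concrete, here is a minimal Python sketch of the choice part of the task. The post only gives the reward ranges, so this assumes each beacon pays a uniformly random whole number within its range; the names `BEACON_REWARDS`, `expected_value`, and `play_round` are hypothetical, and the mouse-click movement is not modeled at all.

```python
import random
from fractions import Fraction

# ASSUMPTION: rewards are uniform whole numbers; the task description only gives ranges.
BEACON_REWARDS = {
    "orange": range(1, 6),  # high-reward beacon: 1 to 5
    "green": range(1, 4),   # low-reward beacon: 1 to 3
}


def expected_value(beacon: str) -> Fraction:
    """Exact mean payout of a beacon under the uniform-reward assumption."""
    outcomes = BEACON_REWARDS[beacon]
    return Fraction(sum(outcomes), len(outcomes))


def play_round(beacon: str, rng: random.Random) -> int:
    """Sample one round's payout once the character reaches `beacon`."""
    return rng.choice(BEACON_REWARDS[beacon])


rng = random.Random(0)
print(expected_value("orange"), expected_value("green"))  # 3 2
print([play_round("orange", rng) for _ in range(5)])      # five sampled orange payouts
```

Under that assumption the orange beacon is worth 3 on average and the green beacon 2, yet any single orange round can still pay 1 while green pays 3. That round-to-round noise is what gives a memory-limited learner something to get wrong.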

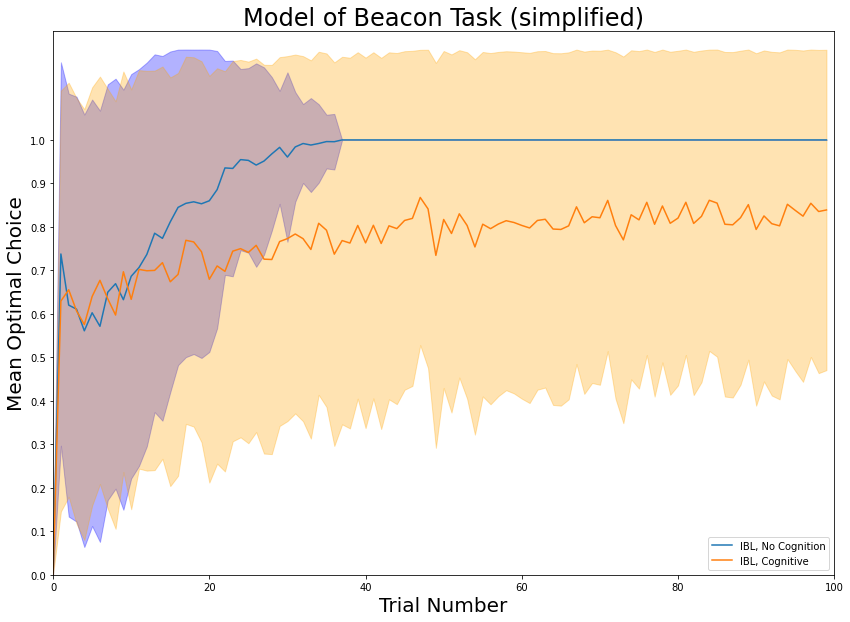

As it turns out, humans exhibit highly consistent learning patterns in n-choice tasks with stochastic rewards, and we can reliably predict human performance with a cognitive model. In Figure 2 we illustrate how certain memory constraints (recency and frequency) affect human decision making. After a period of exploration, a perfectly rational player would choose the orange beacon on every round. Although the orange beacon may randomly pay out less than the green beacon on a given round, its expected value is higher because on average it produces a higher reward. The blue line in the figure shows how optimally the model would perform without the cognitive constraints responsible for recency and frequency effects, and the orange line shows the exact same model when memory retrieval is affected by recency and frequency.
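For intuition about how recency and frequency can pull a learner away from the rational choice, here is a small Python sketch. It is not the model behind Figure 2; it borrows one standard formalization, ACT-R's base-level learning equation B = ln(Σ_j t_j^(-d)), where t_j is the time since the j-th observation and d is a decay rate (conventionally 0.5), and pairs it with instance-based blending, which weights each remembered reward by a softmax over activations. The temperature, the reward history, and all function names are hypothetical, and a plain average stands in, roughly, for the unconstrained model.

```python
import math
from typing import List, Tuple

# Hypothetical parameters: 0.5 is ACT-R's conventional decay; the temperature is made up.
DECAY = 0.5
TEMPERATURE = 0.25

# An instance is (observed reward, list of times it was observed).
Instance = Tuple[float, List[float]]


def base_level_activation(times: List[float], now: float, d: float = DECAY) -> float:
    """ACT-R base-level learning: B = ln(sum_j (now - t_j) ** -d).

    Every past observation adds to the sum (frequency), and older ones
    add less (recency). Requires now > t_j for every t_j.
    """
    return math.log(sum((now - t) ** -d for t in times))


def blended_value(instances: List[Instance], now: float, tau: float = TEMPERATURE) -> float:
    """Memory-constrained estimate: weight each remembered reward by a
    softmax over activations (instance-based blending)."""
    activations = [base_level_activation(times, now) for _, times in instances]
    top = max(activations)  # subtract the max for numerical stability
    weights = [math.exp((a - top) / tau) for a in activations]
    total = sum(weights)
    return sum(w / total * reward for w, (reward, _) in zip(weights, instances))


def unconstrained_value(instances: List[Instance]) -> float:
    """Constraint-free estimate: the plain average of every observed reward."""
    rewards = [reward for reward, times in instances for _ in times]
    return sum(rewards) / len(rewards)


# Hypothetical history at time 10: orange paid 5 and 4 long ago, then 1 recently;
# green paid 3 once, fairly recently.
orange = [(5.0, [1.0]), (4.0, [3.0]), (1.0, [9.0])]
green = [(3.0, [8.0])]
now = 10.0

print(unconstrained_value(orange), unconstrained_value(green))  # ≈ 3.33 vs 3.0: prefers orange
print(blended_value(orange, now), blended_value(green, now))    # ≈ 1.11 vs 3.0: prefers green
```

With the same history, the averaging learner still prefers orange, while the memory-constrained learner is dominated by the single recent payout of 1 and switches to green, the kind of departure from optimal play that separates the two lines in Figure 2.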

More generally, there are many predictable cognitive constraints that affect our decision making and task performance. Cognitive scientists develop and use cognitive architectures and cognitive models as scientific explanations of the human mind.

Common Ground Modeling

Ok, so we use cognitive models to predict human performance. Check. (to be continued…)