Problem

AI can learn to perform complex tasks (e.g. games).

Unlike traditional computer programs, which do what they are engineered to do, modern AI systems (e.g., neural networks and deep neural networks) learn to make decisions on their own through feedback. Although these systems are engineered to learn, once they are trained we typically have very little insight into why they make the decisions they do.

Goal

Develop techniques to explain the decisions of an AI.

Approach

- Develop a reinforcement learner (AI) in a chosen domain

  - StarCraft II

  - 3D Drone Simulation Gridworld

  - Strategy Gridworld Game

- Develop a common ground model

  - Develop a cognitive model of human behavior in the chosen domain

  - Develop a model of the reinforcement learner in the same modeling framework

  - Use the model as an explanation vehicle
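The learner itself is not specified above. As a hedged illustration of the simplest kind of reinforcement learner, the sketch below uses tabular Q-learning (a standard technique, not necessarily the one used in this project) on a hypothetical one-dimensional corridor. `N_STATES`, `ACTIONS`, `step`, and `train_q_table` are illustrative names, not part of the project.

```python
import numpy as np

# Hypothetical toy task: a corridor of N_STATES cells. The agent starts in
# cell 0 and receives a reward of 1 for reaching the last cell.
N_STATES = 6
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state: int, action_idx: int) -> tuple[int, float, bool]:
    """Apply an action; walls clip movement at both ends of the corridor."""
    next_state = min(max(state + ACTIONS[action_idx], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train_q_table(episodes: int = 200, alpha: float = 0.5, gamma: float = 0.9,
                  epsilon: float = 0.2, seed: int = 0) -> np.ndarray:
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = np.random.default_rng(seed)
    q = np.zeros((N_STATES, len(ACTIONS)))
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                # Explore: pick any action uniformly at random.
                action = int(rng.integers(len(ACTIONS)))
            else:
                # Exploit: pick a best action, breaking ties at random.
                best = np.flatnonzero(q[state] == q[state].max())
                action = int(rng.choice(best))
            next_state, reward, done = step(state, action)
            # Q-learning target: reward plus the discounted best next value,
            # with no bootstrapping from the terminal state.
            target = reward if done else reward + gamma * q[next_state].max()
            q[state, action] += alpha * (target - q[state, action])
            state = next_state
    return q
```

After training, `np.argmax(q[s])` gives the learned action in each non-terminal state (here, always "move right"). A small table like this can be inspected state by state; a deep network replaces it with a learned function, which is where the explanation problem described above arises.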

My Tasks

- Engineering simulation environments

  - Built the 3D Drone Simulation Gridworld

  - Built the Gridworld Strategy Game

- Developing the common model

- Analysis

- Writing publishable papers

- Giving presentations at conferences

1. Simulation Environments

Requirements

- Produce output

- Fast/Efficient (for training AI)

- Compatible with an existing drone simulator

- Human playable

Process

Drone Environment

- Consultation with a deep neural network expert

  - Input/output specifications

- Computer science background research on efficient programming libraries

  - NumPy-based

  - Minimal loops and conditionals at runtime

- Feature and action spaces defined by the existing drone simulator, for compatibility

- Prototyping cycle with increasing complexity (more features, more possible moves)

- Keyboard controls to test most functionality before training (human playable)

- Training on simple tasks to find and fix bugs
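The "minimal loops and conditionals" requirement can be illustrated with a lookup-table pattern: action indices select rows of an array of position changes, and `np.clip` enforces the grid boundaries, so a whole batch of drones moves in one vectorized call with no `if`/`else` per move. This is a minimal sketch; the action set, `GRID_SHAPE`, and `move_drones` are hypothetical, since the real feature and action spaces came from the existing drone simulator.

```python
import numpy as np

# Hypothetical action set (x, y, altitude offsets). The actual project took
# its action space from the existing drone simulator.
ACTION_DELTAS = np.array([
    [0, 0, 0],   # 0: hover
    [0, -1, 0],  # 1: north
    [0, 1, 0],   # 2: south
    [1, 0, 0],   # 3: east
    [-1, 0, 0],  # 4: west
    [0, 0, 1],   # 5: climb
    [0, 0, -1],  # 6: descend
])
GRID_SHAPE = np.array([10, 10, 3])  # hypothetical (x, y, altitude) extent


def move_drones(positions: np.ndarray, actions: np.ndarray) -> np.ndarray:
    """Move many drones at once: one table lookup plus one clip, no Python loop.

    positions: (n, 3) integer array; actions: (n,) array of action indices.
    """
    return np.clip(positions + ACTION_DELTAS[actions], 0, GRID_SHAPE - 1)
```

For example, `move_drones(np.array([[0, 0, 0], [5, 5, 2]]), np.array([3, 5]))` moves the first drone east and tries to climb the second, which is already at the top altitude and so stays put.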

Adversary Environment (strategy)

- Consultation with a deep neural network expert

  - Input/output specifications

- Computer science background research on efficient programming libraries

  - NumPy-based

  - Minimal loops and conditionals at runtime

- Design for both human and AI playability

  - Generic “move” input for the AI

  - Keyboard input mapping

  - Designed a Flask web server for Amazon Web Services (AWS) and Amazon Mechanical Turk (MTurk)

- Prototyping cycle with increasing complexity (more features, more possible moves)

- Keyboard controls to test most functionality before training (human playable)

- Training on simple tasks to find and fix bugs

- Practice logging of human data to a database on an AWS server
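A shared generic “move” interface lets humans and the AI drive the same game: the AI emits a move index directly, and a keyboard mapping translates human key presses into the same indices. The sketch below assumes a hypothetical set of five moves and a WASD mapping, and logs each human move to a database. Python's built-in `sqlite3` stands in for the project's AWS-hosted database, and the table schema and function names are assumptions; in a Flask deployment, a request handler would call something like `log_move` for each submitted move.

```python
import sqlite3
from datetime import datetime, timezone
from typing import Optional

# Hypothetical generic moves shared by the AI and human players.
MOVES = ("up", "down", "left", "right", "stay")

# Hypothetical keyboard mapping: the game only ever sees move indices.
KEY_TO_MOVE = {"w": 0, "s": 1, "a": 2, "d": 3, " ": 4}


def human_move(key: str) -> Optional[int]:
    """Translate a key press into the generic move index the AI also uses."""
    return KEY_TO_MOVE.get(key.lower())


def open_log(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a move log; the schema here is illustrative only."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS moves ("
        "player_id TEXT, round_idx INTEGER, turn INTEGER, "
        "move INTEGER, logged_at TEXT)"
    )
    return conn


def log_move(conn: sqlite3.Connection, player_id: str, round_idx: int,
             turn: int, move: int) -> None:
    """Record one human move with a UTC timestamp."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO moves VALUES (?, ?, ?, ?, ?)",
            (player_id, round_idx, turn, move,
             datetime.now(timezone.utc).isoformat()),
        )
```

Because unknown keys map to `None`, the game loop can simply ignore them instead of branching on every possible key.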

Outcome

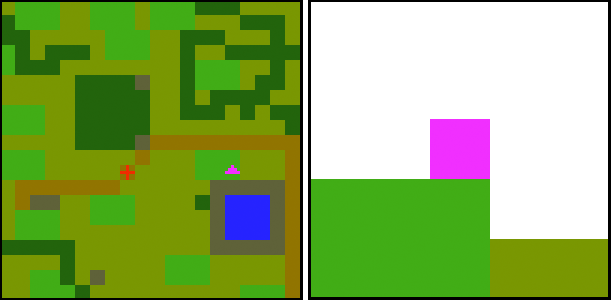

This figure is a screen capture of the resulting drone environment. The allocentric view (left) depicts features of the environment. Pink triangles represent drones: their color indicates altitude, and their point indicates the drone's direction of travel. The red “+” marks the target location, with its surrounding features visible. The egocentric view (right) depicts the environment features in the immediate neighborhood of the drone, oriented to its direction of travel and unfolded into a flat 2D input.
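The egocentric view described above can be sketched in NumPy: crop a window around the drone, rotate it so the direction of travel points up, and flatten it into a vector. This is a minimal sketch, assuming a 2D grid of feature channels with shape `(rows, cols, channels)`, headings encoded as 0 = north, 1 = east, 2 = south, 3 = west, and out-of-bounds cells filled with `pad_value`; the function name and parameters are hypothetical.

```python
import numpy as np


def egocentric_view(grid: np.ndarray, row: int, col: int, heading: int,
                    radius: int = 2, pad_value: float = 0.0) -> np.ndarray:
    """Return the drone's neighborhood, rotated to its heading and flattened.

    grid: (rows, cols, channels) feature array, row 0 = north.
    heading: 0 = north, 1 = east, 2 = south, 3 = west (assumed encoding).
    """
    # Pad so windows near the edge stay full-sized; padded cells get pad_value.
    padded = np.pad(grid, ((radius, radius), (radius, radius), (0, 0)),
                    mode="constant", constant_values=pad_value)
    # After padding, the drone sits at (row + radius, col + radius), so this
    # (2r+1) x (2r+1) window is centered on it.
    window = padded[row:row + 2 * radius + 1, col:col + 2 * radius + 1]
    # np.rot90 turns counterclockwise; k = heading brings the direction of
    # travel to the top of the window (east -> k=1, south -> k=2, west -> k=3).
    rotated = np.rot90(window, k=heading, axes=(0, 1))
    # Unfold into a flat input vector for the network.
    return rotated.reshape(-1)
```

For a drone at the center of a 5x5 grid heading east, a feature two cells to the east lands in the top-center cell of the rotated window, so "ahead" always occupies the same input positions regardless of heading.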

This figure is a screen capture of the resulting custom gridworld environment. The environment is turn-based and synchronous, which focuses the research on strategy rather than skill. The image depicts a simple game that requires the player to move their agent (orange) to the goal (green) while avoiding the adversary (red). An optimal strategy exists that exploits a weakness in the adversary to reach the goal every round.
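One turn of a synchronous, turn-based game can be sketched as follows: both pieces choose their moves from the positions at the start of the turn, the moves are applied together, and only then is the outcome checked. The greedy chasing adversary, the 5x5 board, and the rule that being caught takes priority over reaching the goal are all assumptions for illustration; the actual adversary and its exploitable weakness are not described here.

```python
import numpy as np

# Generic moves as (row, col) offsets: up, down, left, right, stay.
MOVES = np.array([[-1, 0], [1, 0], [0, -1], [0, 1], [0, 0]])
BOARD_SIZE = 5  # hypothetical 5x5 board


def adversary_move(adversary: np.ndarray, agent: np.ndarray) -> np.ndarray:
    """Hypothetical greedy chaser: step one cell along the axis with the larger gap."""
    diff = agent - adversary
    axis = int(np.argmax(np.abs(diff)))  # ties go to the row axis
    step = np.zeros(2, dtype=int)
    step[axis] = np.sign(diff[axis])
    return step


def play_turn(agent: np.ndarray, adversary: np.ndarray, goal: np.ndarray,
              agent_move: int) -> tuple[np.ndarray, np.ndarray, str]:
    """Resolve one synchronous turn and return (agent, adversary, outcome)."""
    # Both moves are computed from the positions at the start of the turn.
    new_agent = np.clip(agent + MOVES[agent_move], 0, BOARD_SIZE - 1)
    new_adversary = np.clip(adversary + adversary_move(adversary, agent),
                            0, BOARD_SIZE - 1)
    # Caught if both land on the same cell or swap cells in the same turn.
    same_cell = np.array_equal(new_agent, new_adversary)
    swapped = (np.array_equal(new_agent, adversary)
               and np.array_equal(new_adversary, agent))
    if same_cell or swapped:
        return new_agent, new_adversary, "lose"
    if np.array_equal(new_agent, goal):
        return new_agent, new_adversary, "win"
    return new_agent, new_adversary, "continue"
```

Because nothing happens between turns, a player (human or AI) can take as long as needed to choose a move, which is why this design emphasizes strategy over reaction speed.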

2. Common Model

(under construction)